Even small errors can sink a proposition.

- The internet is agog with OpenAI’s latest creation, but once again it clearly demonstrates that OpenAI has no idea what it is doing, while Air Canada undermines the reason for using chatbots at all.

Sora

- Sora is OpenAI’s latest model, capable of generating very realistic video, and it has made much of the video content creation industry nervous.

- Sora is a text-to-video algorithm that has been trained to understand and simulate the physical world with the long-term intention of creating a system for solving problems that, until now, have required physical interaction.

- In effect, this is an attempt to create a system for digital twins, which are simulations of real-world assets such as a factory or a building that can be used for testing and design modifications without physically building anything.

- OpenAI’s commentary makes clear that it hopes Sora will be used as a foundation model on which users build their own environments for evaluating physical-world problems.

- This is very much like Nvidia’s Omniverse, except that Nvidia’s offering is built with the rules of the environment clearly defined, whereas OpenAI’s relies on a large model that it hopes has ingested every possible permutation and combination.

- The visual outputs are very impressive and a huge leap from where video generation was a year ago, but once again causality gets in the way.

- For example, the algorithm can draw a chess board with pieces but doesn’t understand anything about the game; it can draw hands but doesn’t know how they work; it can draw dogs but doesn’t realise that they do not spontaneously materialise and then disappear; and it does not know that ants have six legs.

- Where Sora really shines is in high, wide shots of landscapes and places that would typically be captured using a drone and a human operator.

- The details matter in the use case OpenAI is promoting, because a lack of causal understanding of the simulated environment means that any testing done with Sora will be unreliable.

- This is because one can never be sure that a result is not based on a physical characteristic that has been made up rather than drawn from the real-world data.

- Hence, as a starting point, Sora could be quite useful for situations or testing where the subject under examination is limited in scope and specific in nature.

- In this use case, Sora could be fine-tuned for the rules and characteristics of the task, meaning that it should perform a lot better, but only within the use case that has been defined.

- This looks to me like OpenAI is building up a stable of foundation models on which it hopes to entice developers to build all of their generative AI, presumably using a single software development kit or suite of tools.

- I expect everyone else to quickly follow.

Air Canada – Widespread problem.

- Air Canada has dealt a blow to the use of chatbots in customer service by being found liable for its chatbot’s statements by a Canadian tribunal.

- An Air Canada customer was told by the airline’s chatbot that its bereavement policy allowed him to fly after the death of a family member and then claim a refund within 90 days of travel.

- However, Air Canada’s actual policy is that it does not provide refunds for bereavement travel once the flight has been booked. After a lot of back and forth, the customer filed a small claim with British Columbia’s Civil Resolution Tribunal.

- Air Canada attempted to argue that it was not liable for the chatbot’s statements because the chatbot was a separate legal entity responsible for its own actions, and that the customer should never have trusted the chatbot in the first place.

- This argument completely undermines the case for using chatbots: if they cannot be trusted, there is no point whatsoever in having them.

- Needless to say, Air Canada lost the case and was forced to refund a portion of the airfare as well as the user’s legal costs.

- Unsurprisingly, the chatbot has been disabled for over 11 months, and there is no knowing when it will return.

- This is going to undermine the use of LLMs to power chatbots, as companies will either not use them at all or limit their capabilities so much that they become fairly useless.

- This is an example of a widespread problem that I see everywhere in AI services powered by LLMs.

- The problem is that, because LLMs are black boxes, it is impossible to control their behaviour within specific functions.

- As a result, control is exerted by curtailing functionality entirely, which greatly limits the usefulness of the service and increases user frustration.

- This is the equivalent of swatting a fly on a plate with a sledgehammer and, in my opinion, greatly reduces the appeal of using LLMs.

Take Home Message

- While the demonstrations of these systems look incredible, they continue to fail any rigorous examination.

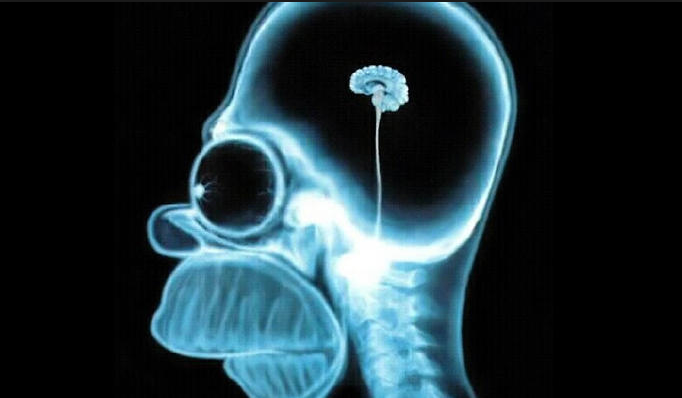

- This is because, despite their apparent sophistication, they are merely statistical pattern recognition systems that are incapable of telling the difference between correlation and causation.

- This weakness is fundamental to their design and has been so for around 40 years.

- This is why I believe that true artificial intelligence will not be achieved until a new method of creating AI is developed, and this could be decades away.

- However, SoftBank’s Mr Son is adamant that true AI will be created within 10 years, echoing all of the other visionaries who have made this exact claim over the last 50 years.